Meta’s AI-Enabled Interview: Everything You Need to Know

Introduction

The landscape of technical interviewing is undergoing a seismic shift. Meta has officially rolled out its AI-Enabled Coding Round, a 60-minute interview designed to simulate a modern developer environment. This isn’t just another LeetCode round; it’s a transition toward “real-world” engineering.

If you have a Meta interview coming up, you need to understand that the “Meta-tagged LeetCode” strategy is no longer enough. Based on the latest insights from recent candidates and engineering insiders, here is everything you need to know to prepare for this new format.

Who is this for? Currently, it’s for SWE (Software Engineer) and M1 (Engineering Manager) roles, primarily at the E4 and E5 levels.

What Exactly Is the AI-Enabled Coding Round?

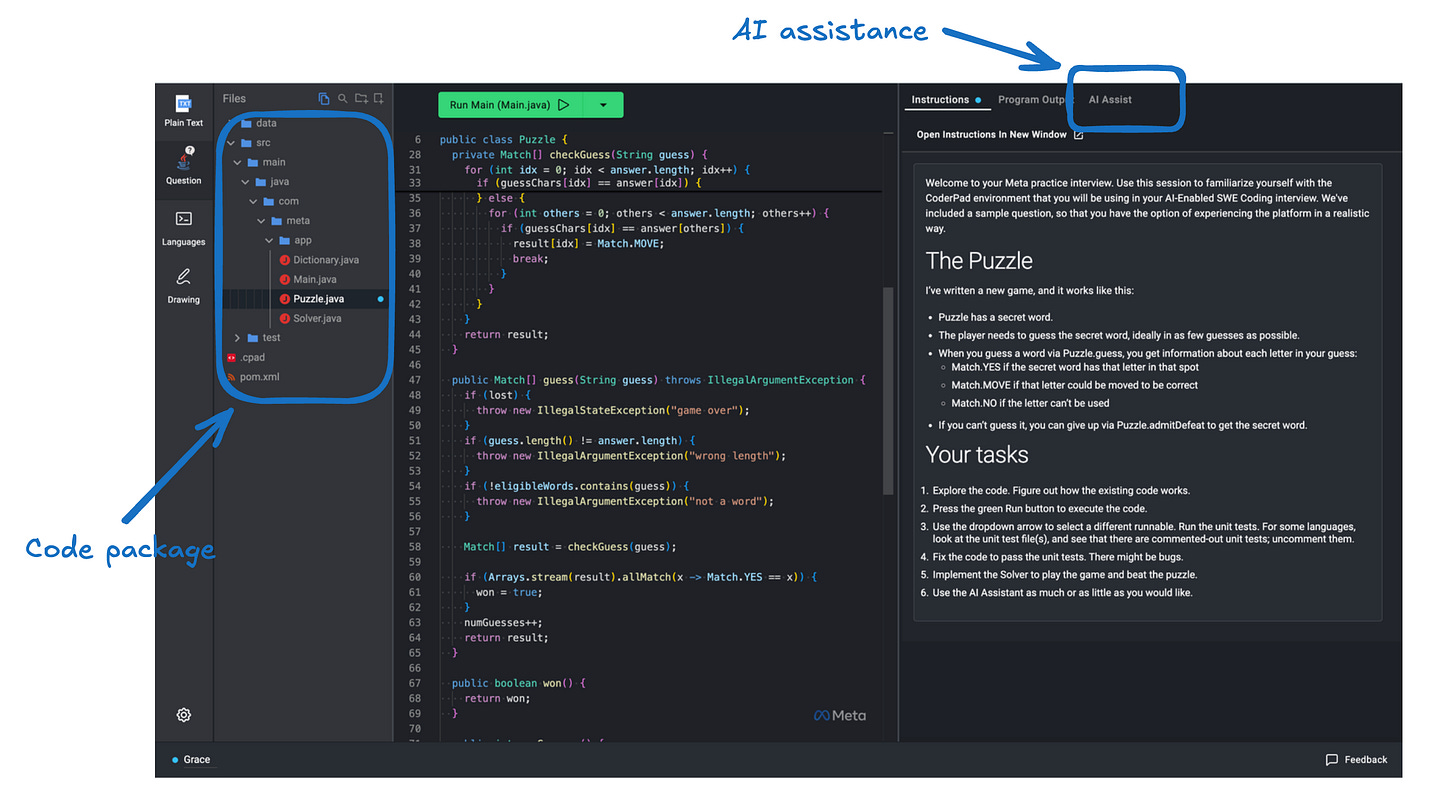

Meta has replaced one of its two traditional onsite coding rounds with this new AI-assisted format. While one round remains the classic algorithm-focused session (no AI), this new 60-minute session provides you with a CoderPad environment equipped with an AI assistant, similar to Cursor or GitHub Copilot.

The Logistics:

Duration: 60 minutes.

Environment: CoderPad with a built-in AI chat window.

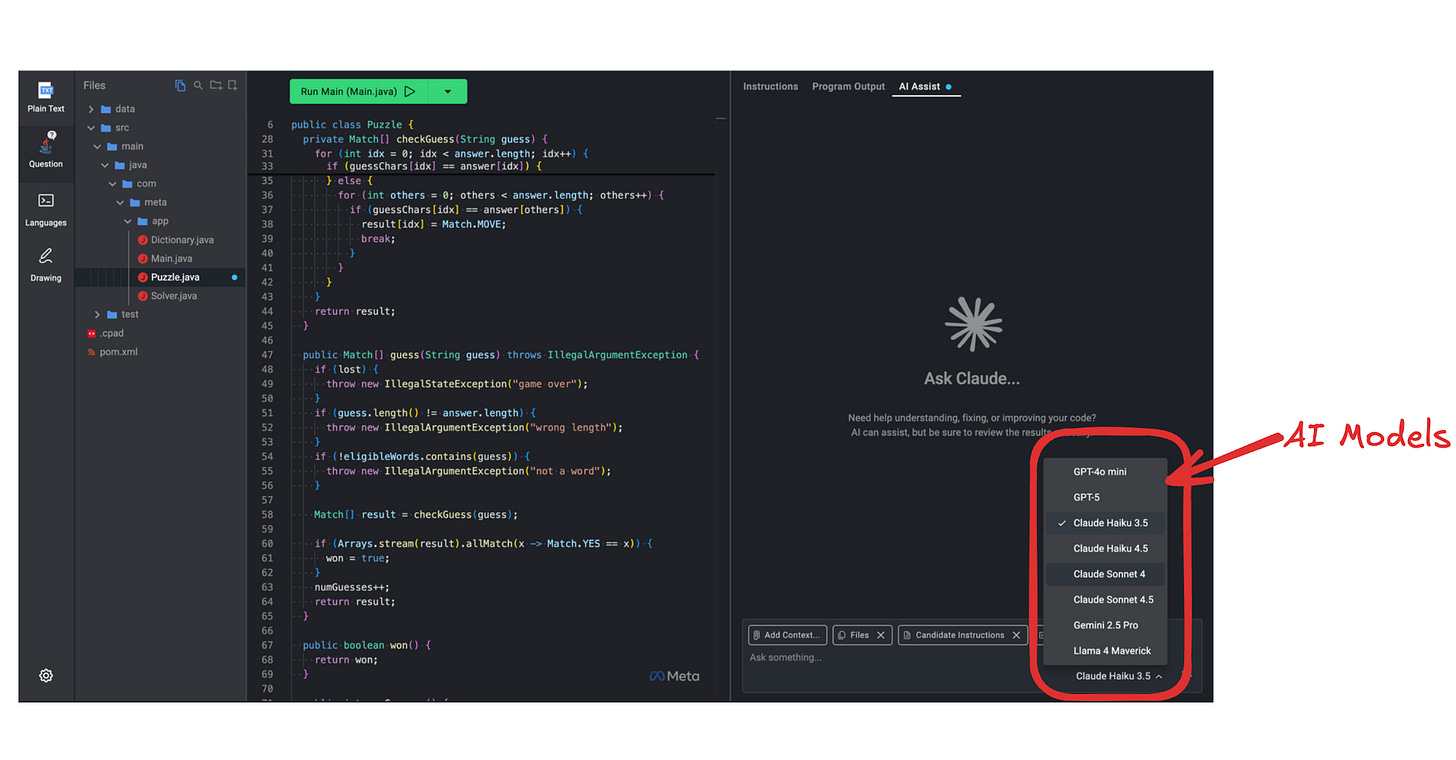

AI Models: You often have a “model switcher” that lets you choose between several LLMs. Models include GPT-4o mini (fast, good for boilerplate); GPT-5; Claude Haiku 3.5 and 4.5 and Sonnet 4 and 4.5 (widely regarded as the strongest for coding); Gemini 2.5 Pro; and Llama 4 Maverick (Meta’s own model).

The Shift: Projects Over Puzzles

In the traditional round, you’d solve two disconnected algorithm problems. In the AI round, you tackle one thematic project with multiple checkpoints or “parts.”

Instead of a single function, you might be given a mini multi-file codebase. This often includes:

Multiple code files.

A data and configuration file.

Existing test suites.

Previously Asked Problems

Building from Scratch: Implementing a feature or utility based on a specific set of requirements. This tests your ability to translate a JIRA-style ticket into code.

Hangman / Word-Guessing Game: Implementing the core game loop. You must write the logic to:

Accept a secret word and reveal blanks.

Validate user input (checking if it’s a single letter).

Update the display and track “lives” left.
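A minimal sketch of that core logic follows. The class name HangmanGame, the default of six lives, and feeding guesses from a list (rather than input()) are all assumptions for illustration; the interview codebase will have its own names and I/O.

```python
class HangmanGame:
    """Core Hangman state: secret word, guessed letters, remaining lives."""

    def __init__(self, secret: str, lives: int = 6) -> None:
        self.secret = secret.lower()
        self.lives = lives
        self.guessed: set[str] = set()

    def display(self) -> str:
        # Reveal guessed letters; show a blank for every other letter.
        return " ".join(ch if ch in self.guessed else "_" for ch in self.secret)

    def guess(self, raw: str) -> bool:
        """Apply one guess and return True if the letter is in the word."""
        letter = raw.strip().lower()
        # Validate input: exactly one alphabetic character, not guessed before.
        if len(letter) != 1 or not letter.isalpha():
            raise ValueError("Please enter a single letter.")
        if letter in self.guessed:
            raise ValueError(f"'{letter}' was already guessed.")
        self.guessed.add(letter)
        if letter in self.secret:
            return True
        self.lives -= 1  # a wrong guess costs one life
        return False

    def is_won(self) -> bool:
        return all(ch in self.guessed for ch in self.secret)

    def is_lost(self) -> bool:
        return self.lives <= 0


def play(secret: str, guesses, lives: int = 6) -> bool:
    """Hypothetical game loop; guesses could come from input() in a real round."""
    game = HangmanGame(secret, lives)
    for raw in guesses:
        try:
            game.guess(raw)
        except ValueError as err:
            print(err)  # invalid input does not cost a life
            continue
        print(game.display(), f"(lives left: {game.lives})")
        if game.is_won() or game.is_lost():
            break
    return game.is_won()
```

Keeping validation inside guess() and the loop in a separate function makes each piece easy to unit-test on its own.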

Maximise Unique Characters: Given a list of words, build a function to find a subset that maximises the number of unique characters without exceeding a total character count. (This is the “LeetCode-style” part of the AI round.)
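One straightforward approach is include/exclude backtracking over the words. This sketch assumes the budget applies to the summed length of the chosen words (the exact constraint may be phrased differently in the interview), and it is exponential in the number of words, so it only suits small inputs.

```python
from typing import List, Tuple


def max_unique_chars(words: List[str], max_chars: int) -> Tuple[int, List[str]]:
    """Return (best unique-character count, chosen words).

    Assumption: the sum of len(word) over chosen words must be <= max_chars.
    Exhaustive include/exclude search, O(2^n) in the number of words.
    """
    best_count, best_subset = 0, []

    def backtrack(i: int, used_len: int, chars: frozenset, chosen: List[str]) -> None:
        nonlocal best_count, best_subset
        # Record the current subset if it beats the best seen so far.
        if len(chars) > best_count:
            best_count, best_subset = len(chars), list(chosen)
        if i == len(words):
            return
        word = words[i]
        # Option 1: include words[i] if it fits in the remaining budget.
        if used_len + len(word) <= max_chars:
            chosen.append(word)
            backtrack(i + 1, used_len + len(word), chars | set(word), chosen)
            chosen.pop()
        # Option 2: skip words[i].
        backtrack(i + 1, used_len, chars, chosen)

    backtrack(0, 0, frozenset(), [])
    return best_count, best_subset
```

For example, with words ["abc", "de", "aaaa", "fgh"] and a budget of 5, ["abc", "de"] reaches 5 unique characters.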

Filesystem Snapshot Analyser: Building a utility from scratch that takes two directory “snapshots” (lists of file metadata) and outputs the diff (Added, Deleted, or Modified files).
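A sketch of the diff step, assuming each snapshot entry carries a path, a size and a checksum. FileMeta and its fields are hypothetical; the real metadata might use a modification time instead of a hash.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List


@dataclass(frozen=True)
class FileMeta:
    path: str
    size: int
    checksum: str  # hypothetical field: could be a content hash or an mtime


def diff_snapshots(old: Iterable[FileMeta], new: Iterable[FileMeta]) -> Dict[str, List[str]]:
    # Index both snapshots by path so each lookup is O(1).
    old_by_path = {f.path: f for f in old}
    new_by_path = {f.path: f for f in new}
    added = sorted(new_by_path.keys() - old_by_path.keys())
    deleted = sorted(old_by_path.keys() - new_by_path.keys())
    # A file present in both snapshots is "modified" if any metadata field differs.
    modified = sorted(
        p for p in old_by_path.keys() & new_by_path.keys()
        if old_by_path[p] != new_by_path[p]
    )
    return {"added": added, "deleted": deleted, "modified": modified}
```

With this design a renamed file shows up as one deletion plus one addition; detecting renames (for example, by matching checksums) would be a natural follow-up part.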

Chatroom Message Processor: Writing a “Sanitisation” module that identifies and masks sensitive information (like phone numbers or emails) using regex or string manipulation before the message is “sent.”
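A regex-based sketch of such a module. Both patterns are deliberately simplified assumptions, not production-grade validators: the phone pattern will also mask long digit runs such as order numbers, which is exactly the kind of edge case worth raising with the interviewer.

```python
import re

# Simplified email pattern: local part, "@", domain with at least one dot.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
# Simplified phone pattern: optional "+", then 8+ characters of digits,
# spaces or dashes that start and end with a digit.
PHONE_RE = re.compile(r"\+?\d[\d\s-]{6,}\d")


def sanitise(message: str) -> str:
    # Mask emails first so digits inside an address are not treated as a phone.
    message = EMAIL_RE.sub("[EMAIL]", message)
    message = PHONE_RE.sub("[PHONE]", message)
    return message
```

For example, "Call me at +1 555-123-4567 or mail jo.doe@example.com" becomes "Call me at [PHONE] or mail [EMAIL]".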

Extending Existing Code: Navigating an unfamiliar codebase and adding new functionality. This is usually the “Level 2” or “Level 3” of the interview task.

Maze Solver (Portals/Keys): After fixing the basic maze, you are asked to modify the code to support “Portals” (teleporting from point A to B) or “Locked Doors” (requiring you to find a key before reaching the exit). This requires changing the state in your BFS: the same cell reached with and without a key must be treated as two different states.
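A sketch of a BFS whose state is (row, column, collected keys). The grid encoding ('@' start, '$' exit, '#' wall, lowercase letter for a key, uppercase letter for the matching door) and the portals dictionary are hypothetical; it also assumes a portal's destination is a plain floor cell and that teleporting is part of the step onto the portal.

```python
from collections import deque
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]


def shortest_escape(grid: List[str], portals: Optional[Dict[Cell, Cell]] = None) -> int:
    """Length of the shortest path from '@' to '$', or -1 if unreachable."""
    portals = portals or {}
    rows, cols = len(grid), len(grid[0])
    start = next((r, c) for r in range(rows) for c in range(cols) if grid[r][c] == "@")
    # The visited set is keyed on (row, col, keys), not just (row, col).
    start_state = (start[0], start[1], frozenset())
    queue = deque([(start_state, 0)])
    seen = {start_state}
    while queue:
        (r, c, keys), dist = queue.popleft()
        if grid[r][c] == "$":
            return dist
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if not (0 <= nr < rows and 0 <= nc < cols) or grid[nr][nc] == "#":
                continue
            ch = grid[nr][nc]
            if ch.isupper() and ch.lower() not in keys:
                continue  # locked door and the matching key is not held yet
            new_keys = keys | {ch} if ch.islower() else keys  # pick up a key
            nr, nc = portals.get((nr, nc), (nr, nc))  # teleport if on a portal
            state = (nr, nc, new_keys)
            if state not in seen:
                seen.add(state)
                queue.append((state, dist + 1))
    return -1
```

For example, shortest_escape(["@aA$"]) returns 3, while shortest_escape(["@.A$"]) returns -1 because the door can never be opened.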

Card Game (Multiplayer Support): Refactoring a single-player card game to support N players. You have to create a Player class and update the Game manager to track state across multiple hands.

Grid Sum to 15 (Clear the Grid): You have a grid-based game. The requirement is extended: instead of just finding a sum of 15 in a row, you must now find it in any “L-shape” or contiguous cluster, requiring a refactor of the search logic.
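For the Grid Sum extension, one way to generalise a row scan is to grow clusters cell by cell, only ever adding a neighbour of the current cluster so it stays contiguous. This sketch assumes positive cell values (so any cluster whose sum passes 15 can be pruned) and 4-directional adjacency; it is exponential in the worst case and only meant for small boards.

```python
from typing import FrozenSet, List, Optional, Tuple

Cell = Tuple[int, int]


def find_cluster(grid: List[List[int]], target: int = 15) -> Optional[FrozenSet[Cell]]:
    """Return one 4-connected group of cells summing to target, or None."""
    rows, cols = len(grid), len(grid[0])

    def neighbours(r: int, c: int):
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < rows and 0 <= nc < cols:
                yield nr, nc

    def grow(cluster: FrozenSet[Cell], total: int) -> Optional[FrozenSet[Cell]]:
        if total == target:
            return cluster
        if total > target:
            return None  # prune: with positive values the sum can only grow
        # Any cell adjacent to the cluster keeps it contiguous, so L-shapes and
        # irregular blobs are reachable, not just straight rows.
        frontier = {nb for cell in cluster for nb in neighbours(*cell) if nb not in cluster}
        for r, c in sorted(frontier):
            found = grow(cluster | {(r, c)}, total + grid[r][c])
            if found:
                return found
        return None

    for r in range(rows):
        for c in range(cols):
            found = grow(frozenset({(r, c)}), grid[r][c])
            if found:
                return found
    return None
```

The same search covers the old “row” case, since a straight run of cells is just one particular contiguous cluster.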

Ads Analytics (Real-time Windowing): Extending the analytics engine to handle “Sliding Windows” (e.g., clicks in the last 60 seconds) rather than static 24-hour buckets.
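A common way to implement a sliding window is a queue of timestamps that evicts stale entries on each query. ClickWindow is a hypothetical name, and the sketch assumes integer timestamps in seconds that arrive in non-decreasing order and a window of (now - 60, now].

```python
from collections import deque


class ClickWindow:
    """Counts clicks in the last `window` seconds using a timestamp queue."""

    def __init__(self, window: int = 60) -> None:
        self.window = window
        self.events = deque()  # click timestamps, assumed non-decreasing

    def record(self, ts: int) -> None:
        self.events.append(ts)

    def count(self, now: int) -> int:
        # Evict clicks outside (now - window, now]. Whether the boundary is
        # inclusive is exactly where off-by-one bugs tend to hide.
        while self.events and self.events[0] <= now - self.window:
            self.events.popleft()
        return len(self.events)
```

Each timestamp is appended and evicted at most once, so the amortised cost per operation is O(1), unlike rescanning a static 24-hour bucket.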

Debugging: Finding and fixing bugs in a broken implementation under time pressure. This is usually the “Part 1” of your interview to get the codebase running.

Maze Solver (Fix the Maze): You are given a DFS/BFS implementation that is failing tests. Common bugs include a missing visited set (causing infinite loops), incorrect coordinate handling (row/column swap), or failing to return the path correctly.

Card Game (Aces Logic): A card game codebase where the calculateScore method is broken: it treats Aces as a fixed value (1) instead of the dynamic 1 or 11 required for Blackjack-style logic.

Ads Analytics Engine (Data Parsing): You are given a utility that parses JSON/CSV logs of ad clicks. The unit tests are failing because of an off-by-one error in the time-window aggregation or a failure to handle null values in the “User ID” field.
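For the Card Game (Aces Logic) bug, one standard shape of the fix is to count every Ace as 11 and then downgrade Aces to 1, one at a time, while the hand is over 21. This sketch is a Python-style stand-in for calculateScore and assumes cards are rank strings ("2" to "10", "J", "Q", "K", "A").

```python
from typing import List


def calculate_score(hand: List[str]) -> int:
    """Blackjack-style score: each Ace is 11 unless that would bust, then 1."""
    total, aces = 0, 0
    for rank in hand:
        if rank == "A":
            aces += 1
            total += 11
        elif rank in ("J", "Q", "K"):
            total += 10
        else:
            total += int(rank)
    # Downgrade Aces from 11 to 1 while the hand is bust.
    while total > 21 and aces:
        total -= 10
        aces -= 1
    return total
```

For example, ["A", "K"] scores 21, while ["A", "A"] scores 12 (one Ace as 11, one as 1).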

Email Inbox (Filtering): A pre-written script that filters emails by “Read/Unread” status is failing to identify the latest email due to a sorting bug in the custom comparator.
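A typical culprit is a comparator that returns a bool instead of a negative, zero or positive number. With Python's functools.cmp_to_key, a bool result is never negative, so the sort never sees one email as “less than” another and cannot order them correctly. The Email fields below (including received_at as a Unix timestamp) are hypothetical.

```python
from dataclasses import dataclass
from functools import cmp_to_key
from typing import List, Optional


@dataclass
class Email:
    subject: str
    received_at: int  # hypothetical: Unix timestamp in seconds
    read: bool


def newest_first(a: Email, b: Email) -> int:
    # Buggy version: `return a.received_at > b.received_at` (bool, never < 0).
    # Correct: negative if a should come first, i.e. a is newer than b.
    return b.received_at - a.received_at


def latest_unread(inbox: List[Email]) -> Optional[Email]:
    unread = [e for e in inbox if not e.read]
    unread.sort(key=cmp_to_key(newest_first))
    return unread[0] if unread else None
```

In your own code, max(unread, key=lambda e: e.received_at) avoids a custom comparator entirely; fixing the existing comparator is still the safer move when the codebase relies on it elsewhere.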

What Is Meta Evaluating?

Meta evaluates you on four core pillars. Notably, “Prompt Engineering” is not one of them. The AI is a tool, not the subject.

Problem Solving: Can you clarify requirements and break down a complex project into manageable parts?

Code Development & Understanding: Can you navigate and build upon existing structures? Does your code work as intended when executed?

Verification & Debugging: This is critical. You are expected to use unit tests, handle edge cases (empty inputs, large values), and fix regressions.

Technical Communication: You must explain your reasoning, justify why you are using (or ignoring) AI suggestions, and collaborate with your interviewer.

How to Use the AI Strategically

A guaranteed way to fail is to “prompt your way to success.” If you ask the AI to “solve the whole problem” and blindly paste the result, you will likely be rejected for lacking “Code Understanding.”

The Right Way to Use AI:

Boilerplate & Scaffolding: Use it to generate repetitive code, class skeletons, or initial test cases.

Heavy Typing: Let it write out long dictionaries or data structures.

Debugging Assistance: If a test is failing, you can ask the AI to help interpret the error or suggest where the logic might be flawed.

Pipelining Your Workflow: While the AI is generating code, don’t just stare at the screen. Use that time to explain your high-level approach or complexity analysis to the interviewer.

What to Avoid:

Giant Code Dumps: Don’t request 100+ lines at once. It’s impossible to verify quickly.

Skipping Tests: Never “eyeball” the code. Run the tests.

Ignoring Regressions: When you fix one part, make sure you haven’t broken the previous parts.

Blind Trust: AI models hallucinate or provide suboptimal “vibe-code.” You are the senior engineer; the AI is your junior pair-partner.

Practical Tips and “Gotchas”

CoderPad Quirks: The output panel in CoderPad does not always clear automatically. If you scroll up to read an old error, it might not auto-scroll down to the new output. Always double-check you are looking at the most recent run.

Brush Up on Testing Frameworks: You will be expected to read and run unit tests.

Python: unittest

C++: GoogleTest (gtest)

Java: JUnit
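For Python, a test file built on the standard-library unittest module usually looks like the sketch below. The function word_counts is a hypothetical stand-in for whatever the interview codebase provides; the point is the structure: a TestCase subclass with one test_ method per behaviour, including edge cases such as empty input.

```python
import unittest


def word_counts(text: str) -> dict:
    """Hypothetical function under test: count lower-cased words."""
    counts = {}
    for word in text.lower().split():
        counts[word] = counts.get(word, 0) + 1
    return counts


class WordCountsTest(unittest.TestCase):
    def test_basic(self):
        self.assertEqual(word_counts("a b a"), {"a": 2, "b": 1})

    def test_empty_input(self):
        # Edge case: empty input should give an empty result, not an error.
        self.assertEqual(word_counts(""), {})

    def test_case_insensitive(self):
        self.assertEqual(word_counts("Meta meta"), {"meta": 2})
```

Saved as a file named test_*.py, it can be run with python -m unittest -v from the same directory; rerunning the whole suite after every change is also the cheapest way to catch regressions in earlier parts.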

Language Choice: Usually limited to Python, Java, C++, C#, and TypeScript.

Time Management: 60 minutes feels like a lot, but navigating a multi-file project and debugging takes more time than a standard LeetCode function.

How to Prepare

The Practice Environment: Once you are in the “full loop” for an onsite, Meta provides a practice CoderPad link (often featuring a “Wordle Solver” or similar problem). Use this. Get familiar with the AI chat toggle and the model switcher.

Focus on Design Problems: Practice LeetCode’s “Design” section (e.g., Design Twitter, Design File System). These require managing state across multiple methods, which is closer to the project-based feel of the AI round.
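As an illustration of that state-across-methods style, here is a sketch loosely modelled on LeetCode’s “Design File System” problem: create_path only succeeds when the parent path already exists, and get returns -1 for unknown paths. The method names and the path rules are assumptions for this sketch, not an exact copy of any problem statement.

```python
class FileSystem:
    """Path -> value store where a path can only be created under an existing parent."""

    def __init__(self) -> None:
        self.paths = {"": -1}  # "" stands for the root

    def create_path(self, path: str, value: int) -> bool:
        # Reject malformed paths and duplicates.
        if not path.startswith("/") or path.endswith("/") or path in self.paths:
            return False
        parent = path[: path.rfind("/")]
        if parent not in self.paths:
            return False  # the parent must be created first
        self.paths[path] = value
        return True

    def get(self, path: str) -> int:
        return self.paths.get(path, -1)
```

A natural “Part 2” for practice is adding a delete operation, which forces you to decide what happens to a path’s children.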

Simulate Extension: Take a problem you solved yesterday and try to add a “Part 2” or “Part 3” to it today. How would you refactor your old code to support a new requirement?

Review AI Code: Practice taking code generated by ChatGPT or Claude and looking for 3 things it got wrong (e.g., edge cases, performance, or readability).

Final Thought

Meta’s shift suggests they value engineering productivity over algorithmic memorisation. They want to see how you work when you have the world’s best tools at your fingertips. If you can lead the AI, verify its output rigorously, and communicate your design clearly, you’ll excel in this new era of interviewing.

Good luck, and remember: you are the pilot; the AI is just the co-pilot.
